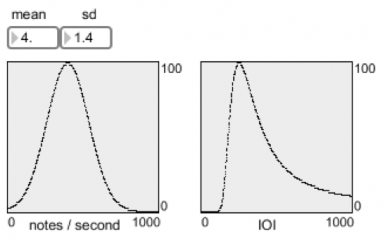

My work with Anders Friberg on extending Temperley’s probabilistic model of melody perception in realtime generative music is moving forward again. We had earlier implemented the melody generation, and I included it in the Max/MSP/Jitter patch I regularly use for performances. Check out my performance at 7th Mostra Sonora i Visual: as you can see and hear, it provides lots of flexibility for performance. We are now extending the technique to include inter-onset intervals (IOI), durations, rhythm adherence and tonal beat strength. Since this is mostly vector processing, I have opted to write the code in Jitter, and it works quite well.

Modulation is very flexible, since we morph from tonal histogram A to tonal histogram B via the common, weighted set AB as the midpoint. It is interesting to consider that A and B can be very different histograms. While they may represent different tonalities (C major and F minor), they may also represent melody profiles of collections of melodies, such as German and French folk tunes respectively, or Modugno and Brel, or Mahler and Sibelius, or Dylan and Lady Gaga! Not that the software produces melodies similar to those of these artists, but over time its output will share the simple statistical characteristics of the material the histograms are derived from.
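
To make the morphing idea concrete, here is a minimal sketch in Python rather than Jitter. It is not the actual patch, and it rests on assumptions of mine: the "common, weighted set" is taken to be the normalised element-wise product of A and B, the morph is piecewise linear (A to midpoint, then midpoint to B), and the two profiles are simple binary scale masks used only for illustration. All function names are hypothetical.

```python
import random

def normalize(hist):
    """Scale a histogram so its values sum to 1."""
    total = sum(hist)
    if total == 0:
        raise ValueError("histogram has no mass")
    return [v / total for v in hist]

def midpoint(a, b):
    """Assumed midpoint: element-wise product, which keeps only the
    pitch classes present in both histograms, weighted by both."""
    common = [x * y for x, y in zip(a, b)]
    if sum(common) == 0:
        # No shared pitch classes: fall back to the plain sum.
        common = [x + y for x, y in zip(a, b)]
    return normalize(common)

def morph(a, b, t):
    """Histogram at position t in [0, 1]: A at 0, midpoint at 0.5, B at 1."""
    a, b = normalize(a), normalize(b)
    m = midpoint(a, b)
    if t <= 0.5:
        start, end, local = a, m, t * 2
    else:
        start, end, local = m, b, (t - 0.5) * 2
    mixed = [(1 - local) * s + local * e for s, e in zip(start, end)]
    return normalize(mixed)

def sample_pitch_class(hist, rng=random):
    """Draw one pitch class (0 = C ... 11 = B) with the histogram as weights."""
    return rng.choices(range(12), weights=hist, k=1)[0]

# Illustrative binary scale masks, not measured melody profiles.
C_MAJOR = [1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1]
F_MINOR = [1, 1, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0]  # F natural minor

if __name__ == "__main__":
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        hist = morph(C_MAJOR, F_MINOR, t)
        notes = [sample_pitch_class(hist) for _ in range(8)]
        print(t, notes)
```

With these two masks the midpoint contains only C, F and G, the pitch classes the scales share, so the melody passes through a neutral pivot on its way from one tonality to the other. Swapping in histograms counted from two melody collections would work the same way.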
