This is a thorny topic in contemporary academic music, but it is the focal point of my residency at NYU Steinhardt, which begins this coming October. The aim is to develop a number of compositions over a two-year period that use systematic procedures in the composition process to address emotional content as well as the musical structures usually treated in score-based composition. There may also be some real-time interaction between musicians and computer.

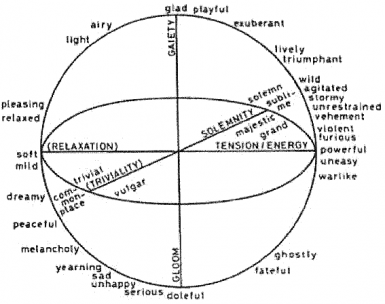

from L. Wedin (1972)

Present-day composition is an expert-based activity, which is very precise in its selection, transformation, and distribution of a material set. But composition methods do not address the music’s emotional content. This requires a method that includes non-expert aspects of emotion in a formalized way. Over the past years I have become increasingly attentive to structuring music from a perspective that includes a wider understanding than what score notation offers. The reason is that music, while expert-based in its making, is founded on non-expert mechanisms in its appreciation. To a non-expert listener, the acoustic surface of a piece of music is all that matters. Such listeners often agree to a very high degree about what type of emotion is expressed in music, even though they obviously have no formal knowledge of music’s structural elements such as notes, chords, and rhythms.

Many music analysis procedures require note-level representation, but this musical metadata does not describe human music perception: there are no notes in the brain. In many circumstances we hear groups of notes (chords) as single objects, and it can be quite difficult to tell whether or not a particular pitch was present in a chord. Furthermore, notation is not necessary for classifying music by genre, identifying musical instruments, or segmenting music into sections.

If musical notation is not a prerequisite for understanding music, then music appreciation must be addressed from angles other than the academic, score-based tradition. Psychoacoustics and perceptually motivated auditory scene analysis are two such viewpoints that offer a wider understanding of music appreciation. Acoustics and signal processing, in turn, often highlight the constraints that perceptual systems rely upon, meaning they could be useful places to look for features relevant to music understanding.

The challenge in integrating composition and emotion is establishing a methodology that allows this integration to be consolidated into formalized compositional procedures. But the growing understanding, over the last ten years, of the meaning, function, and importance of emotion across a vast range of human activities has provided a new perspective on emotion as a fundamental aspect of human conduct in any environment. Today it is both possible and desirable to systematically integrate emotion rendering into music composition.