Software

Babilu Volati graphics and music are generated in real time during the performance. The graphics are created entirely from the speech analysis performed on the singer's voice. The music is influenced both by the vocal input and by feedback from the visuals, and remains continuously under the control of the music composer/performer.

Construction rules

Following phoneme detection, the physics simulation engine controls the behavior of the phoneme particles (the phonemons) and lets them interact freely for a short while before initiating the stabilization phase. In the first phase, free motion, particles are given pseudo-physical attributes such as speed and mass according to the signal processing performed on the voice (duration becomes mass, amplitude becomes mass, etc.). In the second phase, stabilization, the type of each particle (the phoneme recognized as pronounced) influences the distance between the particles. Eventually, when all particles are fixed, a shape emerges from the cloud of particles; this is what we call a 'bloc'.

The shape of a bloc is therefore determined entirely by the sequence of phonemes pronounced (see this paper for more details).
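The two phases can be illustrated with a small 2D sketch in Python. This is not the project's C++ engine: the `Particle` fields, the `SPACING` table, the way duration and amplitude combine into mass (a product here), the launch directions, and the position-based relaxation used for stabilization are all assumptions chosen only to make the idea concrete.

```python
import math
from dataclasses import dataclass

VOWELS = set("aeiou")
# Hypothetical preferred spacing per phoneme class (arbitrary units).
SPACING = {"vowel": 1.0, "consonant": 0.5}


@dataclass
class Particle:
    phoneme: str   # recognized phoneme label
    mass: float    # pseudo-physical mass derived from the voice signal
    x: float = 0.0
    y: float = 0.0
    vx: float = 0.0
    vy: float = 0.0


def rest_length(a, b):
    """Target distance between two particles, set by their phoneme types."""
    def spacing(p):
        return SPACING["vowel" if p.phoneme in VOWELS else "consonant"]
    return 0.5 * (spacing(a) + spacing(b))


def spawn(phonemes, speed=1.0):
    """Create one particle per (label, duration, amplitude) tuple."""
    particles = []
    for i, (label, duration, amplitude) in enumerate(phonemes):
        angle = 2.0 * math.pi * i / len(phonemes)  # assumed launch direction
        particles.append(Particle(label, mass=duration * amplitude,
                                  vx=speed * math.cos(angle),
                                  vy=speed * math.sin(angle)))
    return particles


def free_motion(particles, steps, dt=0.05):
    """Phase 1: particles drift freely with their initial velocity."""
    for _ in range(steps):
        for p in particles:
            p.x += p.vx * dt
            p.y += p.vy * dt


def stabilize(particles, iterations=500, stiffness=0.5):
    """Phase 2: pull consecutive particles toward their type-dependent spacing."""
    for _ in range(iterations):
        for a, b in zip(particles, particles[1:]):
            dx, dy = b.x - a.x, b.y - a.y
            dist = math.hypot(dx, dy)
            if dist == 0.0:
                continue  # coincident particles: no direction to correct along
            corr = stiffness * (dist - rest_length(a, b)) / dist
            wa, wb = 1.0 / a.mass, 1.0 / b.mass  # heavier particles move less
            a.x += dx * corr * wa / (wa + wb)
            a.y += dy * corr * wa / (wa + wb)
            b.x -= dx * corr * wb / (wa + wb)
            b.y -= dy * corr * wb / (wa + wb)
    for p in particles:
        p.vx = p.vy = 0.0  # particles are now fixed


def build_bloc(phonemes):
    """Run both phases and return the final particle positions."""
    particles = spawn(phonemes)
    free_motion(particles, steps=20)
    stabilize(particles)
    return [(p.x, p.y) for p in particles]
```

Because nothing in this sketch is random, calling `build_bloc` twice with the same phoneme sequence yields the same positions, which mirrors the statement that the sequence of phonemes determines the shape.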


Generation of a new 3D world at each performance

As the singer's performance varies from one show to the next, the speech interface provides (slightly) different inputs to the graphics generator. There may be small variations in pronunciation (prosody, amplitude of the voice) that do not fundamentally change the generation of particles, but there will certainly also be larger variations that lead to visible, structural changes in the construction of blocs.

This means that each performance is unique and is represented in a unique way in the generated 3D shapes. As these 3D models are saved after each performance, we are able to build a collection of shapes for each concert (get the OSG 3D models in the Download section).


Technicalities

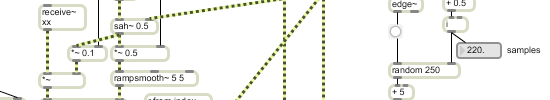

Electronic music

The electronic music is created generically, leaving sufficient room for improvisation and intervention on the part of the electronic performer. Timing is sample-accurate to maximize the rhythmic impact when called for, and everything is coded in Max/MSP/Jitter with many personal twists on the well-known techniques of frequency/phase modulation, sample manipulation, spatialization, spectrum shaping in the frequency and time domains, etc. The audio input, the voice, is allowed to affect the electronic output to varying degrees at different moments, and snippets of code are engaged so as to sway and cast the piece's overall shape.

Computer graphics

3D graphics are rendered with OpenGL and optimized with culling and state-sorting techniques thanks to the OpenSceneGraph library. Everything is developed in C++ and uses multiple threads (physics and network computations run in parallel with rendering) for best efficiency. Some of the visual effects are obtained by direct GPU programming in the OpenGL Shading Language (GLSL). The program can display and animate hundreds of particles and 3D shapes at more than 60 fps.
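The idea of running physics in parallel with rendering can be sketched as follows. This is a Python illustration of the structure only, not the C++ code of the program: the `PhysicsWorld` class, its 1D positions, and the snapshot-under-lock scheme are assumptions made for this example.

```python
import threading
import time


class PhysicsWorld:
    """Hypothetical physics loop running on its own thread."""

    def __init__(self, positions, velocities):
        self._lock = threading.Lock()
        self._positions = list(positions)
        self._velocities = list(velocities)
        self._running = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self.steps = 0

    def _run(self, dt=0.001):
        # Advance the simulation until stop() clears the flag.
        while self._running.is_set():
            with self._lock:
                self._positions = [p + v * dt for p, v in
                                   zip(self._positions, self._velocities)]
                self.steps += 1
            time.sleep(dt)

    def start(self):
        self._running.set()
        self._thread.start()

    def stop(self):
        self._running.clear()
        self._thread.join()

    def snapshot(self):
        """Copy the state under the lock so the renderer never sees a half-updated frame."""
        with self._lock:
            return list(self._positions), self.steps
```

A render loop would call `snapshot()` once per frame and draw from the copy, so it holds the lock only for the duration of the copy and never waits for a full physics step.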

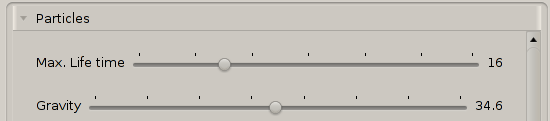

System architecture

The system requires three computers: a Mac (PowerBook) for music and sound processing, a desktop PC to render the graphics, and a PC laptop to control them. They are connected to a local network and exchange data over UDP (e.g. the phonemes detected on the Mac are sent to the 3D program as a packet). The behavior of the graphics can be controlled at runtime by modifying 18 parameters of the physics engine from a small dedicated graphical user interface (Qt application shown above).
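As an illustration of the UDP exchange, the sketch below packs one detected phoneme into a datagram and decodes it on the receiving side. The packet layout (a 4-byte label followed by two 32-bit floats for duration and amplitude) and the function names are hypothetical; the actual wire format used between the Mac and the 3D program is not described here.

```python
import socket
import struct

# Hypothetical layout: 4-byte ASCII label, duration (s), amplitude, network byte order.
PACKET = struct.Struct("!4sff")


def encode_phoneme(label, duration, amplitude):
    """Pack one phoneme event into a fixed-size datagram payload."""
    return PACKET.pack(label.encode("ascii"), duration, amplitude)


def decode_phoneme(payload):
    """Unpack a payload produced by encode_phoneme."""
    raw_label, duration, amplitude = PACKET.unpack(payload)
    return raw_label.rstrip(b"\0").decode("ascii"), duration, amplitude


def send_phoneme(sock, address, label, duration, amplitude):
    """Send one phoneme event to the graphics machine at (host, port)."""
    sock.sendto(encode_phoneme(label, duration, amplitude), address)


def receive_phoneme(sock):
    """Block until one phoneme datagram arrives and return it decoded."""
    payload, _sender = sock.recvfrom(PACKET.size)
    return decode_phoneme(payload)
```

On the sending machine, a socket created with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` is passed to `send_phoneme`; on the receiving machine, a socket of the same kind is bound to a port and passed to `receive_phoneme`. UDP gives no delivery guarantee, which is usually acceptable on a small local network where low latency matters more than retransmission.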